Optimisations

Quest 2 is coming. More powerful!

When I read the specs for Quest (1), I thought that it was not going to be that much different from PC. It has 8 cores, right? Oh, 4 are weaker? That's still 8, let's say, 6. Oh, 4 are used for tracking? That still leaves the more powerful cores free. No? One is for the system? Well, still 3. There's nothing else running, right? Oh, and the GPU. Its speed is just maybe half of my 970?

How naive was I.

When I ran the game, it turned out that it would require lots of work and additional features that the PC version did not need.

I am going to cover the things that I did to make the Quest version work reasonably well. Some of these are general ideas, some heavily depend on my approach/engine.

GPU

- Less detailed meshes. I use procedural generation so this comes almost for free. It's almost as if I had a magical "amount of detail" slider.

- Simpler shaders. I have to admit, I've gone a bit crazy with stuff in the shaders. Voxels, sort-of-PBR, background calculated on the fly. For Quest, I dropped or reduced some of the effects (no speculars, a simpler background for reflections), simplified some calculations (for characters, fog values are calculated once per character, not per pixel), etc.

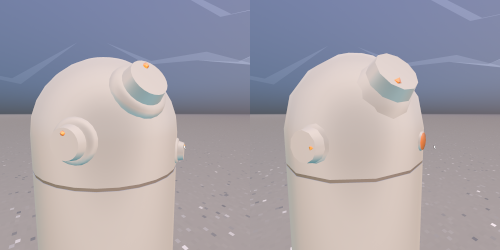

On the left side is the PC version of the shader. You can see the fuzzy reflections of the sun and the sky. On the right side is Quest, reflecting a simplified version of the background without the sun.

- Even simpler shaders. Because the textures I use are not real textures, they're more like material lookups, I decided to store the values in vertex data. This way I lose the varied look that some of the materials had depending on the angle you look at the surface from. But it's a bit of GPU power saved.

- Level Of Detail system. The further a mesh is from the camera, the less screen space it takes and the fewer details it needs. I didn't have that for PCVR. On Quest it helps with getting 100k-triangle scenes well below 70k.
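A minimal sketch of distance-based LOD selection, in Python for brevity rather than the engine's own code. The thresholds, `select_lod` and `scene_triangles` are made up for illustration. A real system might pick the level from projected screen size instead of raw distance.

```python
from bisect import bisect_right

# Hypothetical distances in metres; past each one the next, coarser LOD is used.
LOD_DISTANCES = [5.0, 15.0, 40.0]


def select_lod(distance, thresholds=LOD_DISTANCES):
    """Return 0 for the most detailed mesh, len(thresholds) for the coarsest."""
    return bisect_right(thresholds, distance)


def scene_triangles(meshes):
    """Total triangles drawn for (distance, [triangle count per LOD]) pairs.

    Meshes with fewer LODs than thresholds fall back to their coarsest one.
    """
    return sum(
        counts[min(select_lod(distance), len(counts) - 1)]
        for distance, counts in meshes
    )
```

With per-LOD counts of `[1000, 400, 100, 20]`, a mesh 2 m away is drawn with 1000 triangles and the same mesh 20 m away with 100.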

- Foveated rendering. With this feature on, the parts of the view closer to the edge are less detailed - instead of calculating 4 pixels, just 1 gets calculated. It's a bit visible, but if you are not actively looking at it, most of the time you should not notice.

- Because of the foveated rendering, there's no post-process. And it's not an issue, right? Well... I use lots of gradients. Without any additional processing, you will see the boundaries of each level of colour. On PC the whole thing was rendered to a texture with more bits per colour and a post-process was applied to add a bit of noise. On Quest, the noise is added per pixel while the scene is being rendered.
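The anti-banding idea can be sketched as adding a little noise before a colour value is rounded to its final precision. This is an illustration in Python, not the actual shader. The function names, the 256 levels and the uniform noise of plus or minus half a step are all assumptions.

```python
import random


def quantize(value, levels=256):
    """Round a 0..1 colour value to the nearest of `levels` steps."""
    return min(levels - 1, max(0, round(value * (levels - 1))))


def quantize_dithered(value, rng, levels=256):
    """Same, but with up to half a step of noise added before rounding.

    Neighbouring pixels then land on different sides of a band boundary,
    so on average the area keeps the in-between colour.
    """
    noise = rng.random() - 0.5
    return min(levels - 1, max(0, round(value * (levels - 1) + noise)))
```

A value that sits between two steps always rounds to the same step without noise, which is what creates a visible band. With noise it becomes a mix of the two neighbouring steps.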

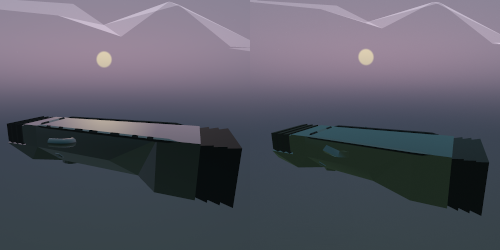

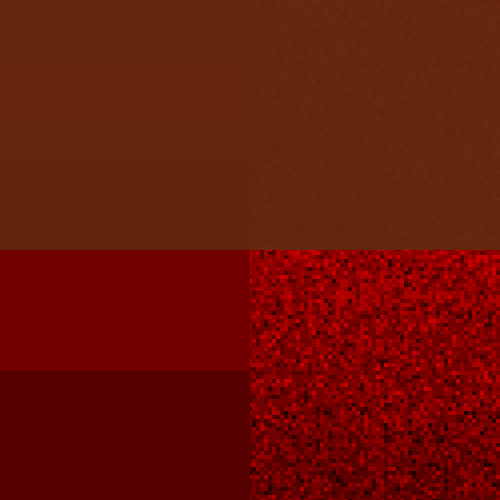

Without anti-banding on the left side. With anti-banding on the right side. The top row is the actual output. The bottom row is enhanced. The thing is that on a screen it might not be as apparent as it is in VR. In VR it looks really flat.

- Order of rendering. To save GPU power, it's good to avoid doing calculations that will be wasted because a computed pixel is going to get covered by another pixel. To avoid that, render the closest meshes first.
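Rendering the closest meshes first boils down to sorting opaque meshes by distance from the camera before submitting them. A small sketch, where `Mesh` and `front_to_back` are hypothetical names and a single position per mesh is a simplifying assumption:

```python
from dataclasses import dataclass


@dataclass
class Mesh:
    name: str
    position: tuple  # (x, y, z) in world space


def squared_distance(a, b):
    """Squared distance is enough for ordering and avoids the square root."""
    return sum((p - q) ** 2 for p, q in zip(a, b))


def front_to_back(meshes, camera_position):
    """Opaque meshes ordered nearest first, so the depth test can reject
    pixels that are hidden behind something already drawn."""
    return sorted(
        meshes, key=lambda m: squared_distance(m.position, camera_position)
    )
```

This ordering only suits opaque geometry. Transparent meshes usually need the opposite order.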

CPU

In normal conditions, I used 1 thread/core for rendering and the others for gameplay tasks. When a level was starting, I was using one of those additional cores/threads to generate the level. After that was done, all available cores were free for the gameplay code. And initially, there were lots of hiccups at the beginning of the level. That was kind of okay, as it was happening just for a few seconds. WAS. Right now the game is changing into an open world and level generation takes place much more often, sometimes during intense moments. You don't want to encounter lag then. I had to do lots of things to get what I wanted: a seamless open world with no loading screens.

I started with two cores occupied all the time and had to get down to one core at most, to still have some room for the extra expensive things that may happen randomly.

- Because of the way I did multithreading, I have to manually distribute tasks. My recommendation is to not do that: create jobs/threads and let the OS do the management. But as I already had the system, I discovered that for a few ms the render thread is waiting for the last frame to finish. That was some time that could be used to help the gameplay code. Before taking a job, the render thread checks how much time is left until the expected end of rendering. If it's enough, it does the job.
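The "check how much time is left before taking a job" step might look like the sketch below. It assumes a simple queue of callables and a fixed per-job time estimate. Both are illustrative choices, not how the engine actually tracks its jobs.

```python
import time
from collections import deque


def run_jobs_within_budget(jobs, deadline, estimated_job_seconds,
                           clock=time.perf_counter):
    """Run queued jobs while one more is expected to fit before `deadline`.

    jobs: deque of callables (hypothetical job representation).
    deadline: time, on the same clock, when rendering is expected to resume.
    Returns the number of jobs that were run.
    """
    ran = 0
    while jobs and deadline - clock() >= estimated_job_seconds:
        job = jobs.popleft()
        job()
        ran += 1
    return ran
```

The clock is a parameter so the logic can be exercised with a fake timer. The estimate has to be conservative, because a job that overruns delays the next frame.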

- I learned that while I thought I had 3 cores, I can't just hog them. Which I initially did. The operating system was still using them for some tasks (like time-warping). The game lagged and I switched to using 2 cores. Then I realised that I could just use a lower priority for some of the threads, to let the operating system do the things that have to be done immediately. I was back at 3 cores.

- There were lots of characters that were far away and not visible, yet they were doing their stuff continuously. I decided to suspend characters that are far away.

- I didn't want characters to go around the corner and stay there frozen, so the distance to suspend them is larger. But the characters behind a corner don't have to be ticked every frame, right? So they don't. The further they are, the more frames they skip.

This is what actually happens behind the corner.

- Also, some heavier calculations are done once per few frames - like collisions/gradient checks. This was required because I have my own physics system (portals!) and some of the stuff could still be optimised. It works for the same reason: as I use soft physics for characters, if someone moves into a wall, physics will just gently push them away from the wall.

- Speaking of collisions. Lots of models were too complicated. Or there were dozens of things that you couldn't even get to, let alone move on. Simplifying assets that use too much GPU or CPU time might sometimes be easier than building a general solution.

- Impossible spaces require a bit different approach when it comes to AI. AI just can't move in a straight line towards the player. It has to know the path through portals. And when I started to work on that, I thought that it may also see through portals. And then I decided to make it even more complicated and store the last known position of the enemy, so the AI has its own perception. With such an approach, you can sneak up on the enemy from behind and push it into a chasm. This also means that sometimes if you duck and stay hidden, you may confuse the enemies - they will go to the last location where they think you are, only to discover you're no longer there. Fancy, right? But it comes at a cost. As does some other stuff that AI does. That's why AI that is not visible tends to skip frames.
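The "last known position" part of such perception can be sketched on its own. Here `can_see_target` is supplied by the caller, so visibility through portals and pathfinding are left out. The class and method names are made up for illustration.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

Vec = Tuple[float, float]


@dataclass
class Perception:
    # Where the AI believes the target is; None means it has no idea.
    last_known: Optional[Vec] = None

    def observe(self, target_position: Vec, can_see_target: bool) -> None:
        """Belief is refreshed only while the target is actually visible."""
        if can_see_target:
            self.last_known = target_position

    def destination(self) -> Optional[Vec]:
        """The AI walks to its belief, not to the target's real position."""
        return self.last_known

    def reached_destination(self, can_see_target: bool) -> None:
        """Arrived and nobody is here: the belief was stale, so drop it."""
        if not can_see_target:
            self.last_known = None
```

Because the real position is never read while the target is hidden, ducking behind cover leaves the AI walking towards a stale point.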

- And as I had such a system working, I decided to use the same frame skipping mechanism for other things.
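The frame skipping described in the bullets above could look roughly like this. The distances, the maximum interval and the function names are assumptions for the sake of the example, not values from the game.

```python
def frames_between_ticks(distance, full_rate_distance=10.0,
                         suspend_distance=60.0, max_interval=8):
    """How often a hidden object is ticked: 1 = every frame, None = suspended."""
    if distance >= suspend_distance:
        return None
    if distance <= full_rate_distance:
        return 1
    # Scale linearly from 1 up to max_interval as the distance grows.
    t = (distance - full_rate_distance) / (suspend_distance - full_rate_distance)
    return 1 + round(t * (max_interval - 1))


def should_tick(object_id, frame, distance, visible):
    """Visible objects always tick; hidden ones skip frames or are suspended."""
    if visible:
        return True
    interval = frames_between_ticks(distance)
    if interval is None:
        return False
    # Offsetting by the id spreads objects over different frames,
    # so they do not all tick on the same one.
    return (frame + object_id) % interval == 0
```

An object that skips frames should probably be ticked with the time accumulated since its last tick, so that it still moves at the right speed.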

- When I was almost where I wanted to be, I realised that background tasks take a lot of time. Sometimes those tasks need to modify the game world (to put a new object in) or do something that can't be done at just any time, as it may use incomplete data, etc. I needed a specific window of time during the game frame to do some short tasks - sync(hronised) jobs. I discovered that background tasks spent lots of time waiting for those windows of opportunity. I changed that to allow doing synchronised jobs whenever nothing else needs world data.

- While I was inspecting the game frame, I noticed that there are some things happening that maybe don't take lots of time, but they're not doing anything useful. Like each door/portal checking if it should open or close, even though almost all doors/portals are just open passages. Identifying such objects and jobs saved a bit of time too.
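One common way to arrange synchronised jobs is a thread-safe queue that background tasks push into and the game thread drains at safe points. This is a sketch under that assumption. `SyncJobs` and its methods are hypothetical and not the engine's API.

```python
import queue


class SyncJobs:
    def __init__(self):
        self._pending = queue.Queue()  # safe to use from several threads

    def submit(self, job):
        """Called from background tasks; the job runs later on the game thread."""
        self._pending.put(job)

    def run_pending(self, max_jobs=4):
        """Called by the game thread at any point where nothing else is
        reading or writing world data. Returns how many jobs were run."""
        ran = 0
        while ran < max_jobs:
            try:
                job = self._pending.get_nowait()
            except queue.Empty:
                break
            job()
            ran += 1
        return ran
```

Calling `run_pending` at several safe points per frame instead of one shortens the time a background task waits for its change to be applied. The `max_jobs` cap keeps each of those points short.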

And that's all I think. At least when it comes to things that I already did. There are still things that require optimisation. Or breaking into multiple frames. Or just doing less of them. Etc.

If I had to condense all of that into a very short list of suggestions, it would look something like this:

- Allow skipping doing things that are not visible.

- Check if there are things done every frame that might be done every few frames or that don't do anything anyway - drop/skip them.

- Check if some of the bottlenecks come from particular assets - fix those assets.

- It's better to have a slightly worse looking game but running smoothly. Especially when it comes to VR.

What am I working on now? I've implemented an open world. The freedom you have in where you want to go works quite well. Especially with the new gameplay loop that I am working on right now. It's getting simpler, more focused on what you're doing at the moment than "oh, I need to grind to unlock stuff" (which I initially wanted to avoid, but I ended up implementing exactly what I didn't want).

If you want to check it out, there's a preview build available (join my discord server "void room" and check out "preview-builds" channel). And before I publish an official update with open-world and new gameplay loop, I will write about implementing open-world with impossible spaces for VR.

Get Tea For God

Tea For God

vr roguelite using impossible spaces / euclidean orbifold

| Field | Value |
| --- | --- |
| Status | In development |
| Author | void room |
| Genre | Shooter |
| Tags | euclidean-orbifold, impossible-spaces, Oculus Quest, Oculus Rift, Procedural Generation, Roguelite, Virtual Reality (VR) |
| Languages | English |


Comments


very interesting to read the optimizations; i hope you can keep working on this project

Thanks :) I work on this project and I want to finish it and release it.

Really great read. I love your LOD gif of the red robot

Great post and thank you so much for going through the effort! This is my favorite game to play on the quest. Real exploration! I hope you'll be able to support it still as the Quest2 gets out in the world (I know that's a big request though). Looking forward to the next update!

You should enjoy the next update as it introduces an open world (and simplifies the core gameplay loop). When I settle with the gameplay, I plan to start adding more content :)