things I learned about foveated rendering while failing to implement OpenXR

Recently I decided to implement OpenXR support. It all went quite well until I realised that foveated rendering did not work. I couldn't make it work at all; even the sample didn't work.

I decided to go a bit deeper and set up foveated rendering manually using an OpenGL extension. That's where things started to get really interesting.

But first: what is foveated rendering? In general, it is an approach that renders some parts of the view with more detail and others with less. This is done by adjusting the resolution of parts of the render target and, as I learned, also by disabling MSAA (multisample anti-aliasing, a feature that smooths edges).

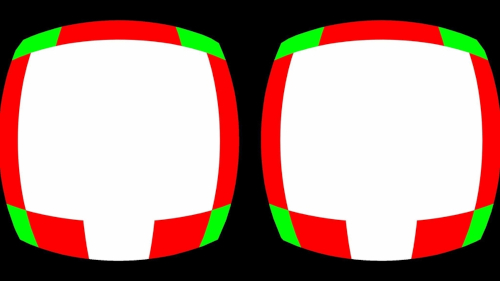

This is especially useful for VR: the larger the FOV, the more distorted the parts further from the centre are. You have to render a lot of detail for something that will then be squished and is barely visible anyway.

Foveated rendering is a way to distribute GPU power more sensibly: spend more of it on the centre and less closer to the edges.

Quest has a Qualcomm processor whose GPU uses tiled rendering: when rendering something, the render target is divided into rectangular areas (tiles). There's much more to it, but this is all we need to know for now.
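A rough sketch of splitting a render target into tiles, assuming one fixed tile size. The real tile (bin) size is chosen by the driver, and the 96-pixel tiles used in the example are made up, as are the type and function names. It does show one consequence: the last column and row get clipped, so their size, and the whole layout, depends on the render target size.

```c
#include <assert.h>

/* One rectangular tile of the render target, in pixels. */
typedef struct {
    int x, y;          /* top-left corner */
    int width, height; /* smaller than the nominal size for clipped edge tiles */
} Tile;

/* Number of tiles needed along one axis (ceiling division). */
int tile_count(int size, int tile_size)
{
    assert(size > 0 && tile_size > 0);
    return (size + tile_size - 1) / tile_size;
}

/* Returns tile (col, row) of a width x height render target split into
 * tile_w x tile_h tiles; the last column/row is clipped to the target. */
Tile get_tile(int width, int height, int tile_w, int tile_h, int col, int row)
{
    Tile t;
    assert(col >= 0 && col < tile_count(width, tile_w));
    assert(row >= 0 && row < tile_count(height, tile_h));
    t.x = col * tile_w;
    t.y = row * tile_h;
    t.width = (t.x + tile_w <= width) ? tile_w : width - t.x;
    t.height = (t.y + tile_h <= height) ? tile_h : height - t.y;
    return t;
}
```

For example, a 1000-pixel-wide target with 96-pixel tiles needs 11 columns, the last one 40 pixels wide; at 1100 pixels it needs 12 columns, the last one 44 pixels wide.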

On Quest, foveated rendering works by dividing the final image into rectangles with varying foveation levels. The overall foveation level can also be adjusted: low, medium or high. VRAPI also allows setting it to "highest", which may work for OpenXR too, but I couldn't make foveated rendering work at all, at least not through OpenXR.

When I implemented my own support for the feature, it worked. But then I realised that I had to set up the tile layout myself, so that there is more detail in the centre and less closer to the edges. I thought it might be as easy as copying what VRAPI sets.

Well...

Not really.

What I actually saw was different from what I expected. I expected something like this:

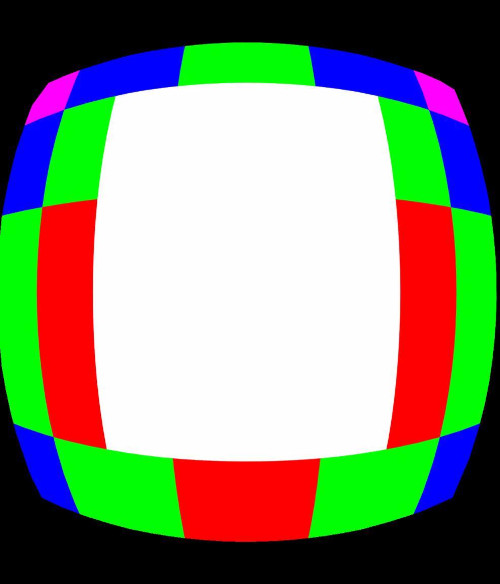

Starting with level 0: white (0), red (1), green (2), blue (3), magenta (4)

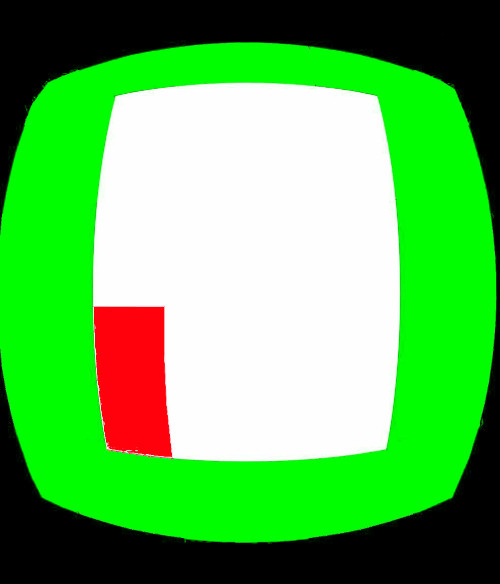

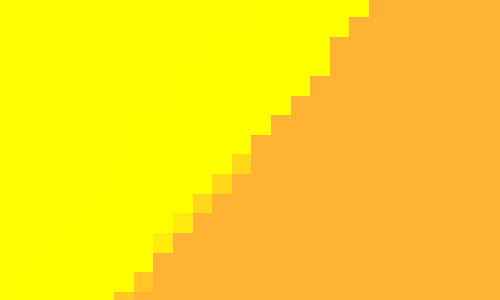

But it was more like this:

And then I realised that the render target resolution affects the tile layout as well.

"I may need to set it up on my own."

When foveated rendering is set up via the OpenGL extension, you provide focal points. Each describes a so-called pixel density, and the final pixel density is the highest value across all focal points.

Each focal point is described by a location, a falloff (strength) and a radius at which the density starts to drop.

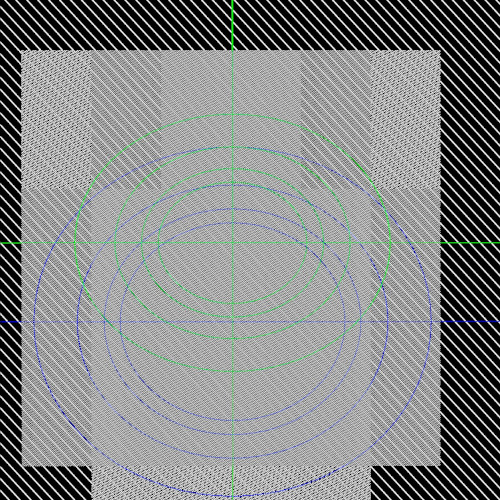

Doing it by hand felt like playing "guess what number I'm thinking of". Only when I decided to visualise the focal points did I learn how fragile the system is: even a small change to the resolution or to a focal point's parameters may switch a tile to a different foveation level (pixel density). With such a visualisation, though, finding a good setup was much easier than guessing.

Grey-scale patterns are easy to implement and make the various foveation levels easy to tell apart (although some details are lost in the rescaled image).

There are a few things that I learned.

First, a higher level doesn't make every pixel uniformly bigger. The scale alternates between axes, so at a higher level (lower density) only one dimension grows.

Second, level 1 (100% pixel density) and level 2 (50% pixel density) have the same resolution but differ in other settings. MSAA definitely gets disabled; I don't know what else changes.

Third, the rectangles may differ in size, especially at the edges.

I also noticed that by default Quest has a minimum pixel density. This means the image won't get any blurrier than a certain amount (1x2, no MSAA).
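The alternating scale from the first point can be illustrated with a small function in which each halving of pixel density doubles only one axis of the pixel footprint. Which axis grows first is an assumption (the "1x2" above could be either orientation), the function name is made up, and these steps are not Quest's level numbers, which also toggle MSAA.

```c
#include <assert.h>

/* Pixel footprint (in full-resolution pixels) after `steps` halvings of
 * density, growing one axis at a time: 1x1, 2x1, 2x2, 4x2, 4x4, ...
 * The density is 1 / (w * h) = 2^-steps. */
void footprint_for_steps(int steps, int *w, int *h)
{
    assert(steps >= 0 && steps < 30);
    *w = 1 << ((steps + 1) / 2); /* x doubles on odd steps */
    *h = 1 << (steps / 2);       /* y doubles on even steps */
}
```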

This is where I went a bit crazy: I added extra foveated rendering levels (setups) and a maximum foveation level setting, so everyone can tweak the values to their preference.

I don't mind a blurrier image at the edges of my view, but some people may not want that; for them there's a lower maximum foveation level.

For a long time the game ran at 110% scale, which meant it required more GPU power. This led me to change foveated rendering to have more detail in the centre.

I also created a spreadsheet with all the patterns and the relative GPU power needed to render them. It feels a bit like guessing, as I can't really tell how much difference MSAA versus no MSAA makes, since it only affects edges. Comparing these values alone (assuming the scale went from 110% to 100%), it seemed the new setup should perform better. Playing the actual game showed that it indeed does.
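A sketch of the kind of estimate such a spreadsheet can hold, assuming cost is proportional to the number of shaded pixels: weight each tile's area by its density, and account for the render scale, which is assumed to be per-axis (so 110% scale means 1.21x the pixels of 100%). Like the spreadsheet, it ignores the MSAA difference. The function names are hypothetical.

```c
#include <assert.h>

/* Relative shading cost of a foveation pattern, as a fraction of rendering
 * every tile at full density: sum(tile area * density) / total area.
 * MSAA and other per-level differences are ignored. */
double relative_cost(const int *tile_areas, const double *densities, int count)
{
    double weighted = 0.0, total = 0.0;
    assert(count > 0);
    for (int i = 0; i < count; ++i) {
        weighted += tile_areas[i] * densities[i];
        total += tile_areas[i];
    }
    return weighted / total;
}

/* Pixel count grows with the square of a per-axis render scale,
 * e.g. 1.1 -> 1.21x the pixels of 1.0. */
double scale_cost(double per_axis_scale)
{
    return per_axis_scale * per_axis_scale;
}
```

For four equal tiles at densities 1, 0.5, 0.5 and 0.25, the cost is 0.5625 of rendering everything at full density; multiplying by `scale_cost` lets patterns at different render scales be compared.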

That's why I will keep my approach to foveated rendering.

I still have no idea why OpenXR's implementation does not work. Using RenderDoc, I learned that it doesn't even set up foveated rendering at the requested level.

I also have no idea why resetting the view (holding the system menu button) recenters the guardian data. For this one, I decided to reinitialise OpenXR, which works: the "have you tried turning it off and on again" joke working in real life.

It took more time than I anticipated, but I also implemented smooth locomotion and a few other things.

Now? I am working on game content, and I want to post a picture of what I'm working on every now and then.


Tea For God

vr roguelite using impossible spaces / euclidean orbifold

| Field | Value |
|---|---|
| Status | In development |
| Author | void room |
| Genre | Shooter |
| Tags | euclidean-orbifold, impossible-spaces, Oculus Quest, Oculus Rift, Procedural Generation, Roguelite, Virtual Reality (VR) |
| Languages | English |


Comments


Pure gold. Thanks for the technical deep dive. Sadly I definitely don't have the expertise to roll out my own solution as you have done. Is it something you would consider putting up on GitHub?

There's really not much more to it than what's in the extension's sample (https://www.khronos.org/registry/OpenGL/extensions/QCOM/QCOM_texture_foveated.tx...). One odd thing I do is send the setup for a few frames, as I noticed that Oculus changes it. I could be wrong and it might be unnecessary, but as it works, I keep it.

I would need to either modify an existing sample (although I would still need to put it into a Visual Studio project) or create a new one, as the way it is done in my engine requires lots of other steps (like loading config files and checking for various things; there are implementations of multiple VR systems).

The easiest way to test it yourself is to get OpenXR from Oculus (https://developer.oculus.com/documentation/native/android/mobile-openxr/), take the XrCompositor_NativeActivity sample, go to `ovrRenderer_RenderFrame` and set up foveated rendering before `ovrFramebuffer_SetCurrent(frameBuffer);` using samples (1) and (3) from the extension's spec. Just replace `foveatedTexture` with `frameBuffer->ColorSwapChainImage[frameBuffer->TextureSwapChainIndex].image`. It should look something like this:

You may also need to add this somewhere earlier in the file:

```c
#include <EGL/egl.h>
#include <GLES3/gl3.h>

/* Skip these if the platform's gl2ext.h already defines the extension. */
#ifndef GL_QCOM_texture_foveated
#define GL_FOVEATION_ENABLE_BIT_QCOM 0x01
#define GL_FOVEATION_SCALED_BIN_METHOD_BIT_QCOM 0x02
#define GL_TEXTURE_FOVEATED_FEATURE_BITS_QCOM 0x8BFB
#define GL_TEXTURE_FOVEATED_FEATURE_QUERY_QCOM 0x8BFD
#define GL_FRAMEBUFFER_INCOMPLETE_FOVEATION_QCOM 0x8BFF
#define GL_TEXTURE_FOVEATED_MIN_PIXEL_DENSITY_QCOM 0x8BFC
#define GL_TEXTURE_FOVEATED_NUM_FOCAL_POINTS_QUERY_QCOM 0x8BFE

typedef void (GL_APIENTRY* PFNGLTEXTUREFOVEATIONPARAMETERSQCOMPROC)(
    GLuint texture, GLuint layer, GLuint focalPoint,
    GLfloat focalX, GLfloat focalY, GLfloat gainX, GLfloat gainY, GLfloat foveaArea);
#endif

// this in some init function
PFNGLTEXTUREFOVEATIONPARAMETERSQCOMPROC glTextureFoveationParametersQCOM =
    (PFNGLTEXTUREFOVEATIONPARAMETERSQCOMPROC)eglGetProcAddress("glTextureFoveationParametersQCOM");
```

This will only work if OpenGL ES supports this extension (Quest, some Android devices).
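Sending the setup for a few frames, mentioned in the reply above, could be tracked with a small counter like the one below. The type and function names and the frame count are hypothetical; the reply doesn't say how many frames are used. The GL call itself is left to the caller, so the logic stays independent of the renderer.

```c
#include <assert.h>

/* Hypothetical helper: re-sends the foveation setup for a number of frames
 * after it changes, in case the runtime overwrites it in between. */
typedef struct {
    int frames_left;    /* how many more frames to re-apply the setup */
    int frames_to_send; /* how many frames to send it for after a change */
} FoveationResender;

void resender_init(FoveationResender *r, int frames_to_send)
{
    assert(frames_to_send > 0);
    r->frames_to_send = frames_to_send;
    r->frames_left = frames_to_send;
}

/* Call when the foveation setup (focal points, levels) changes. */
void resender_mark_changed(FoveationResender *r)
{
    r->frames_left = r->frames_to_send;
}

/* Call once per frame before binding the framebuffer. Returns 1 when the
 * caller should apply the setup this frame (e.g. by calling
 * glTextureFoveationParametersQCOM), 0 otherwise. */
int resender_should_apply(FoveationResender *r)
{
    if (r->frames_left > 0) {
        --r->frames_left;
        return 1;
    }
    return 0;
}
```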

Thank you! Way out of my depth here, but that may be a good way to learn to swim...

So cool, I was just wondering whatever happened to foveated rendering in VR and saw this!! :D

It's used in many games :) as Fixed Foveated Rendering, i.e. without eye-tracking.

Do you think eye-tracked foveated rendering is on the horizon, and that it will be as good as it sounds? I remember "DeepRender" from Oculus Connect 5, which required rendering only 5% of pixels on each display, but that was years ago. I haven't heard about it since, as well as all the other fancy features they talked about that, for the most part, seem to have disappeared and weren't mentioned in the Cambria presentation. 😅

I have no idea. With a GPU similar to what Quest has at the moment, and with rendering broken into such big tiles, it might be unusable. But then, if the area is big enough, you may not notice, as peripheral vision is quite blurry, and wherever you look you should get the sharpest image.

There were some companies that did eye-tracking stuff for Quest. It should be fairly easy to test foveated rendering if one has access to this hardware.